XCP-ng or Proxmox

After using ESXi for almost a decade, around three years of it for Snakeoil development, I decided it was time to move on to other hypervisors for my new Ryzen server.

I want dedicated hypervisors running on bare metal, so the two leading contenders are XCP-ng and Proxmox VE. At the time I searched online but could not find a definitive answer for my use case.

In the end I decided to go with XCP-ng first and see how it goes. After about 5 months of use, I decided to try out Proxmox VE. Here's my experience so far with XCP-ng and now PVE.

Hardware System

It's worth describing the machines I'm running the hypervisors on. The setup is as follows:

| Machine | Description |
|---|---|
| Main Server | AMD Ryzen 3600; 64 GB DDR4 3200 MHz RAM; 250 GB NVMe; 2-port SFP+ @ 10 Gbps |
| Secondary Server | Intel Xeon E3-1230 v3 (4 cores + HT); 32 GB DDR3 ECC; 6x 2 TB Hitachi HDDs; 6-port Ethernet @ 1 Gbps |
| Tertiary Server | Intel Celeron 3865U; 16 GB RAM (SO-DIMM); 250 GB WD HDD; 6-port Ethernet @ 1 Gbps |
| NAS | Intel Xeon E3-1260L @ 2.40 GHz; 16 GB DDR3 ECC; 4x 8 TB; 60 GB cache (SSD); 250 GB ZIL/SLOG (SSD) |

The network switch is a UniFi Switch 48 (non-PoE).

All virtual machines (VMs) run on the main server; the secondary server is only powered up when needed. All VM disks are stored on storage local to the server, with backups saved on the NAS.

The main server has a dedicated 10 Gbps connection to the NAS, while the two backup machines have 4x 1 Gbps Intel links back to the switch.

XCP-ng

The motherboard and Ryzen I'm using were relatively new at the time, but XCP-ng booted, installed and worked just fine on the Ryzen CPU straight away. Because the X570 chipset was so new, the kernel had no sensor support for it at all, but that will resolve itself over time.

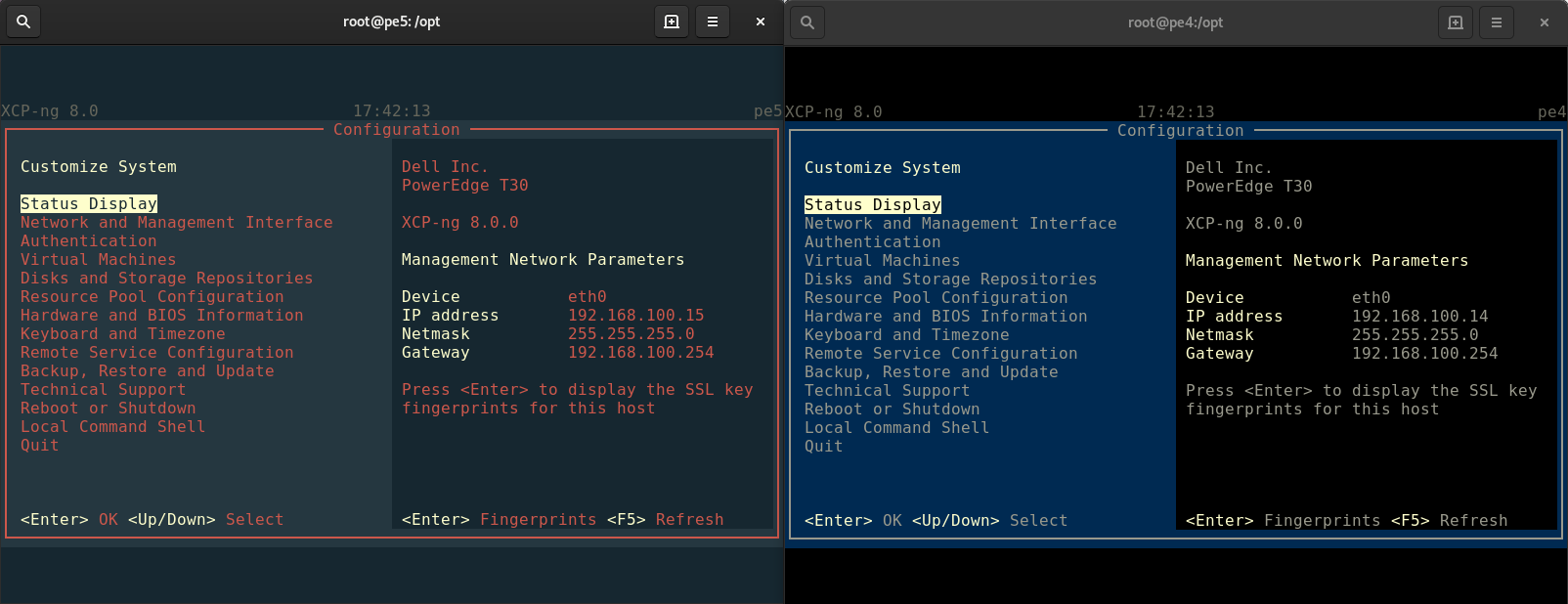

XCP-ng is comfortable to use and felt almost ESXi-like to me, with a very nice console for starting up and shutting down VMs or the host itself.

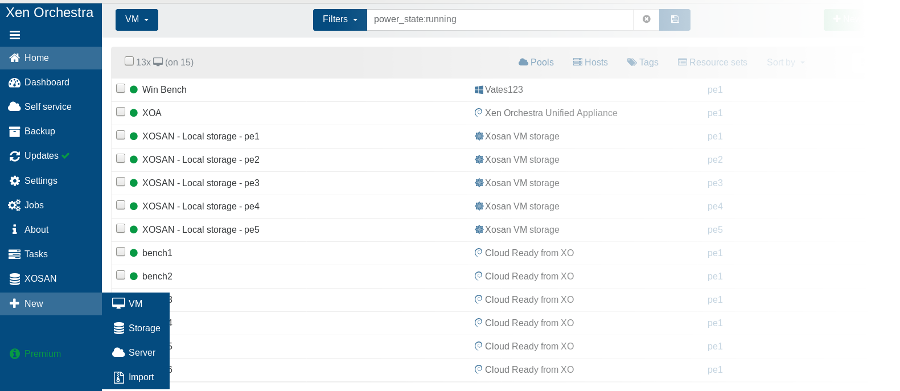

As with ESXi (where vCenter fills this role), you need an additional VM for cluster management. You can run XCP-ng stand-alone, but adding Xen Orchestra (even the free edition) really brings the whole suite up to enterprise grade.

Configuring VM auto start/stop is confusing; for some reason it took me multiple reboots to get it right.

VMs seem fast and responsive, but there are times when they aren't. My biggest gripe is that network throughput can be hit and miss: running Untangle (my inter-VLAN router and security scanner) as a VM basically killed the network.

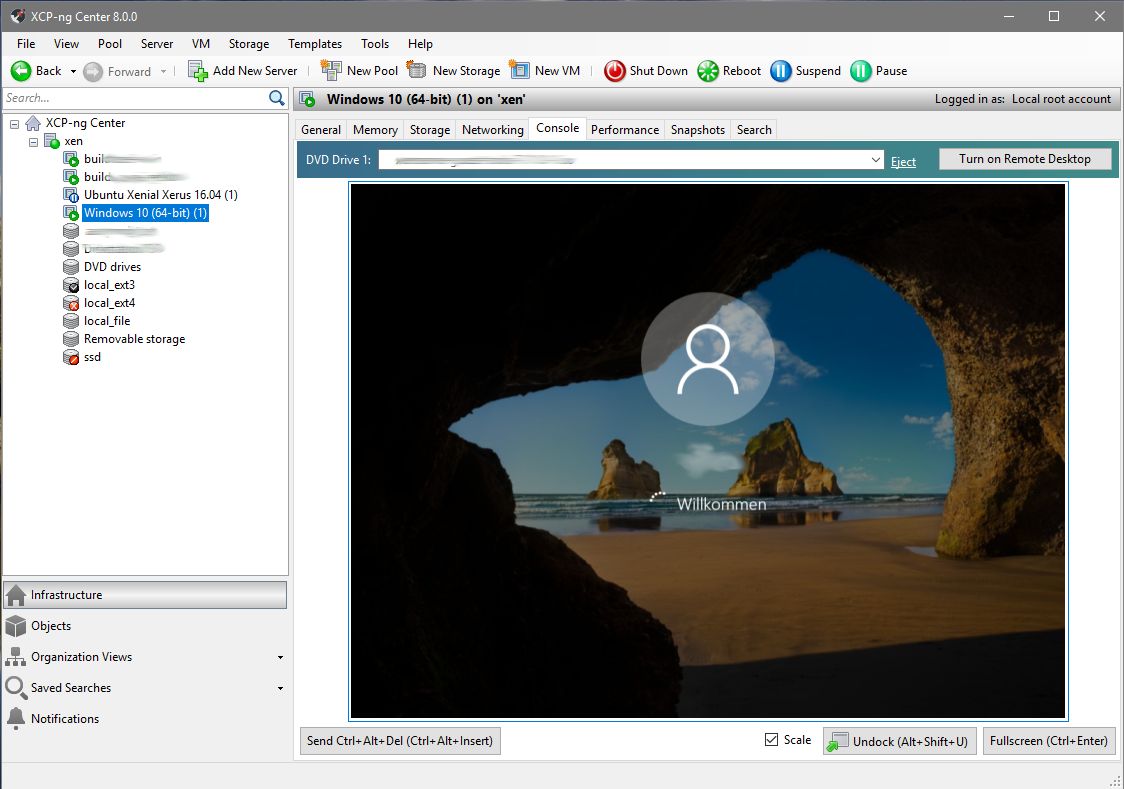

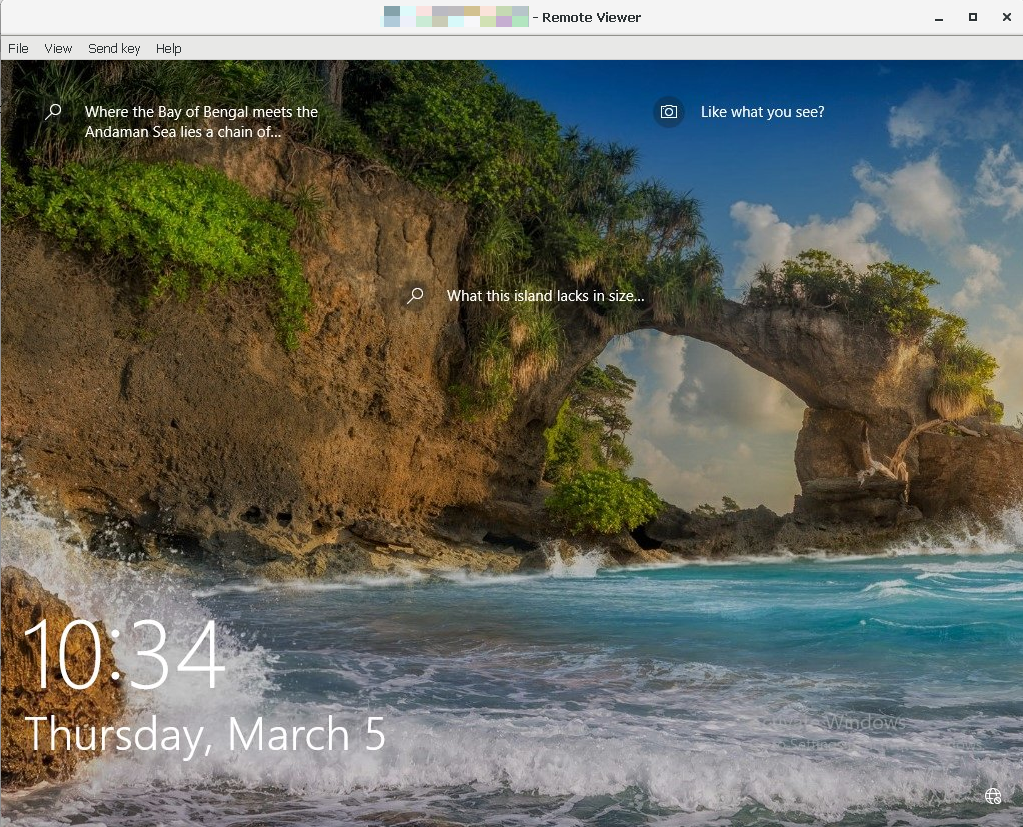

Administration-wise, you can use Xen Orchestra or XCP-ng Center for Windows; there is nothing else as far as I know. That's not a big deal if you don't need console access to the VMs, but a pain in the ass when you do. When I VPN back into the home network and load up a Windows VM, the graphics over my slow 50/20 Mbps connection are pretty laggy.

There is also no audio support. Sure, you can set up RDP and get audio that way, but what about Linux machines? This is especially troublesome for Snakeoil development: I had to buy a cheap USB audio device and pass it through to the VM. That makes it harder to migrate the VM between the three servers, because the USB audio device is tied to the host, not to the computer I'm working on.

I like to keep my software up to date, and XCP-ng released several updates in my few months of use. Unfortunately, I had to reboot the server each and every time. That isn't a problem in a cluster of hosts with the same CPU type, because you can live migrate the VMs to another host first. My hosts are mixed (Ryzen, Xeon and Celeron), so I cannot live migrate VMs: I have to shut down all my VMs, reboot the host and power the VMs back up. Every update therefore means downtime in my setup.

These are my main gripes. At the end of the day I reckon XCP-ng is better suited to headless VMs: machines where all you need to do is SSH in. It is not well suited to VMs that you need to access through a GUI console.

If you don't have a Windows machine, XCP-ng can be a real pain. Sure, you can do almost everything in Xen Orchestra, or run XCP-ng Center under Windows emulation, but at the end of the day you have to use XOA and XCP-ng Center together: there are things you can only configure in XCP-ng Center (e.g. MTU) and things you can only configure in Xen Orchestra (e.g. backups). To this day I have no idea how to change a network MTU from the web interface.

While it's more enterprise-focused, it still lacks pieces to complete the whole picture. Something I'm sure will be addressed in time.

Proxmox

Most Ryzen motherboards don't have a remote console, so I use a SilverStone case with a built-in LCD monitor, plus a small 10" LCD monitor as a backup. Unfortunately, neither monitor worked when I tried to install Proxmox. The Proxmox installer runs at a full 1920x1080 or higher resolution, which my monitors don't support. After an hour of trying to coax a working VGA mode out of it, I gave up and lugged my FHD 16:9 monitor over to connect it straight to the server. In my opinion Proxmox should use a standard 800x600 resolution for its graphical installer, or better yet, offer a text-mode install!

Anyway, unlike XCP-ng, Proxmox just boots into a normal text console, and luckily that console works on my built-in LCD.

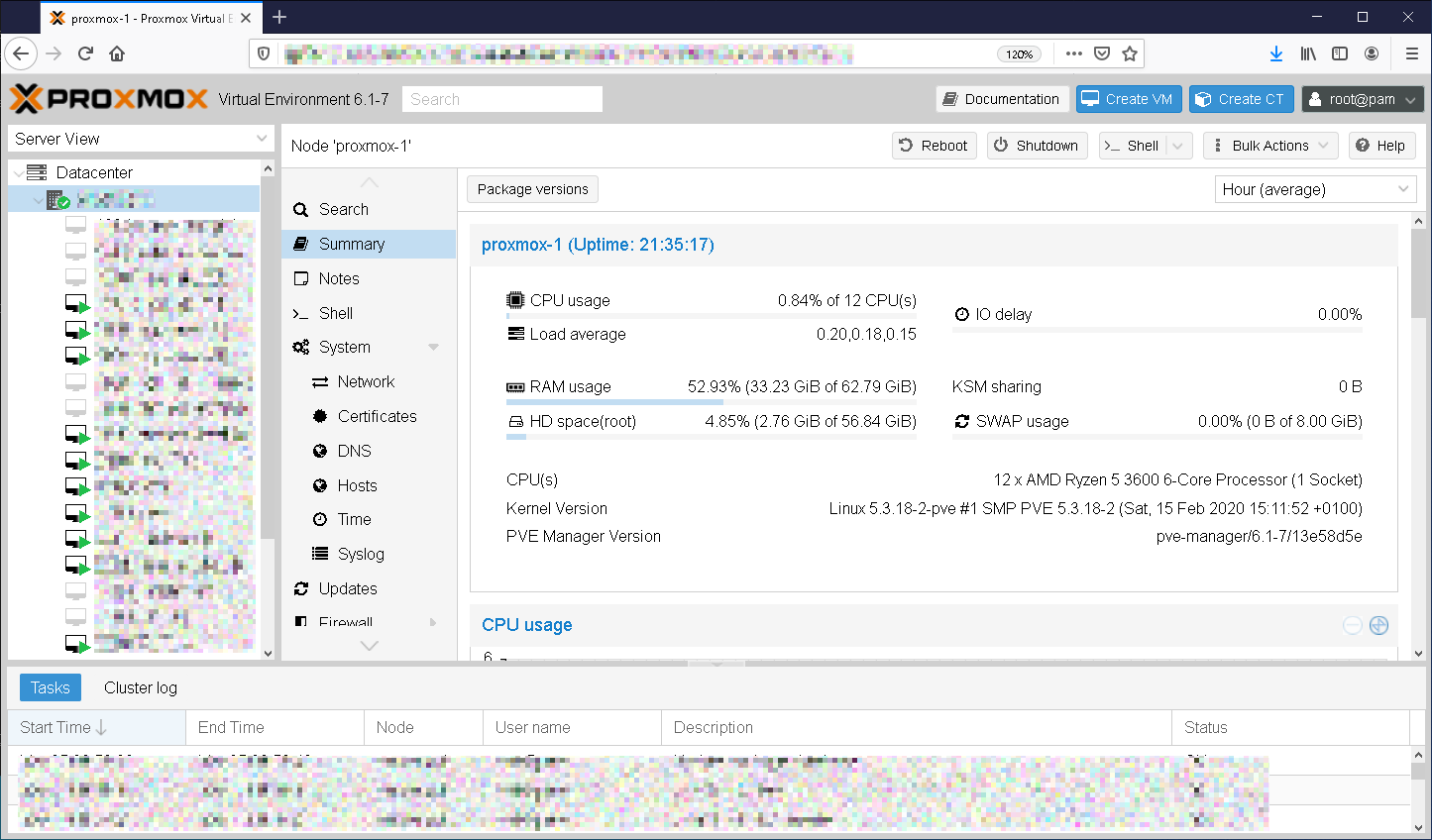

I was glad to see that the kernel (5.3.18-2-pve) supports some of the sensors on my motherboard (the default XCP-ng kernel detected none).

Now it's time for configuration. There's plenty of documentation (a bundled HTML and PDF admin guide, plus a wiki), but it has gaping holes, and it takes a lot of digging to find out how to configure things a certain way. For example, how do I set up an Open vSwitch bridge with jumbo frames?

Any configuration change to Open vSwitch requires a reboot to take effect. Other than rebooting into a new kernel, I don't recall ever having to do this in the Linux ecosystem. Sure, it's very common in Windows administration, but this is certainly a first for me on Linux.

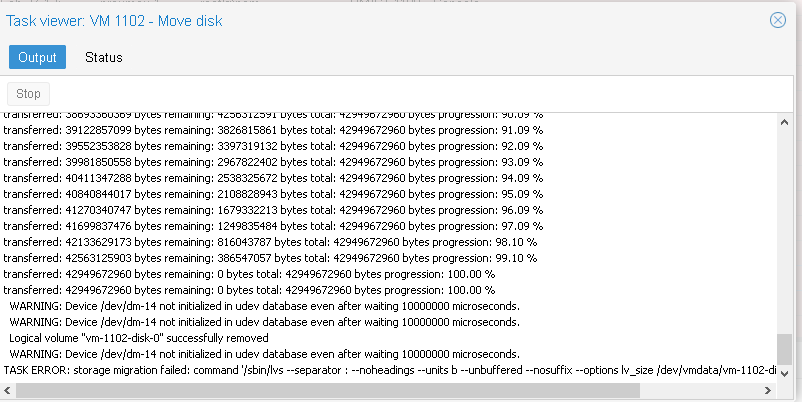

Then there were some weird LVM errors. I gave up on the HDD, moved to an SSD, and the problem went away.

Backups in XOA are impressive; the feature is less impressive in Proxmox. A full backup of a VM takes about as long on both, but Proxmox can only perform full backups, not incremental backups like XOA. On XCP-ng my backup strategy can be one full backup on Sunday and incremental backups on the remaining days, which lets me restore the VM to any day of the week. This cannot be done in Proxmox: I had to do a full backup every day to get the same outcome, at the extra cost of storage space and I/O.
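To make the trade-off concrete, here is a minimal Python sketch of the two strategies. It assumes each incremental backup stores only the blocks changed since the previous backup, so restoring to a given day means replaying the Sunday full plus every incremental up to that day. The function names are illustrative, and the 40 GB full and 2 GB daily incremental sizes are hypothetical inputs, not measurements from my VMs.

```python
from typing import List, Tuple

# One weekly backup cycle, starting with the full backup on Sunday.
WEEK = ["Sun", "Mon", "Tue", "Wed", "Thu", "Fri", "Sat"]


def restore_chain(day: str) -> List[str]:
    """Backups needed to restore the VM to `day` under a
    'full on Sunday, incremental on the other days' schedule.

    Each incremental only holds changes since the previous backup, so a
    restore replays the Sunday full plus every incremental up to `day`.
    """
    idx = WEEK.index(day)  # raises ValueError for an unknown day
    return WEEK[: idx + 1]


def weekly_storage_gb(full_gb: float, incremental_gb: float) -> Tuple[float, float]:
    """Return (full-every-day, full-plus-incrementals) storage for one week.

    Both sizes are hypothetical inputs, not measured values.
    """
    full_daily = full_gb * len(WEEK)
    full_plus_inc = full_gb + incremental_gb * (len(WEEK) - 1)
    return full_daily, full_plus_inc


if __name__ == "__main__":
    print(restore_chain("Wed"))          # ['Sun', 'Mon', 'Tue', 'Wed']
    print(weekly_storage_gb(40.0, 2.0))  # (280.0, 52.0)
```

With those hypothetical sizes, a week of daily fulls takes 280 GB versus 52 GB for one full plus six incrementals. The price is that a Saturday restore depends on all seven backups in the chain being intact.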

There is also a very big problem with I/O. Restoring backups (or migrating VM storage) is so I/O-intensive that it practically kills any VM running on the same local storage (I'm using LVM thin provisioning). While evaluating the two hypervisors I had to perform multiple restores, and I don't recall my other VMs freezing during a restore on XCP-ng. On Proxmox, restoring a backup causes problems.

Audio is supported in VMs. This is probably unimportant to almost everybody, but it's a really important feature to have for Snakeoil development.

Proxmox offers two ways to access the console: SPICE or VNC. For graphical environments I prefer SPICE; it is a pleasure to use. The console experience in Proxmox is a lot better than in XCP-ng, and I don't experience the same latency problems with GUI desktops over my slow Internet connection. SPICE and VNC clients are available for macOS and other OSes, so you are not tied to Windows. This is a big win over XCP-ng.

Web administration is built in, so you do not need to install an additional VM for it. Clustering works fine (tip: set up your cluster before you create your VMs). The web interface is the only tool you need to administer the cluster, as everything can be done from it (the only time I drop to the CLI is when I need to manually kill a running VM).

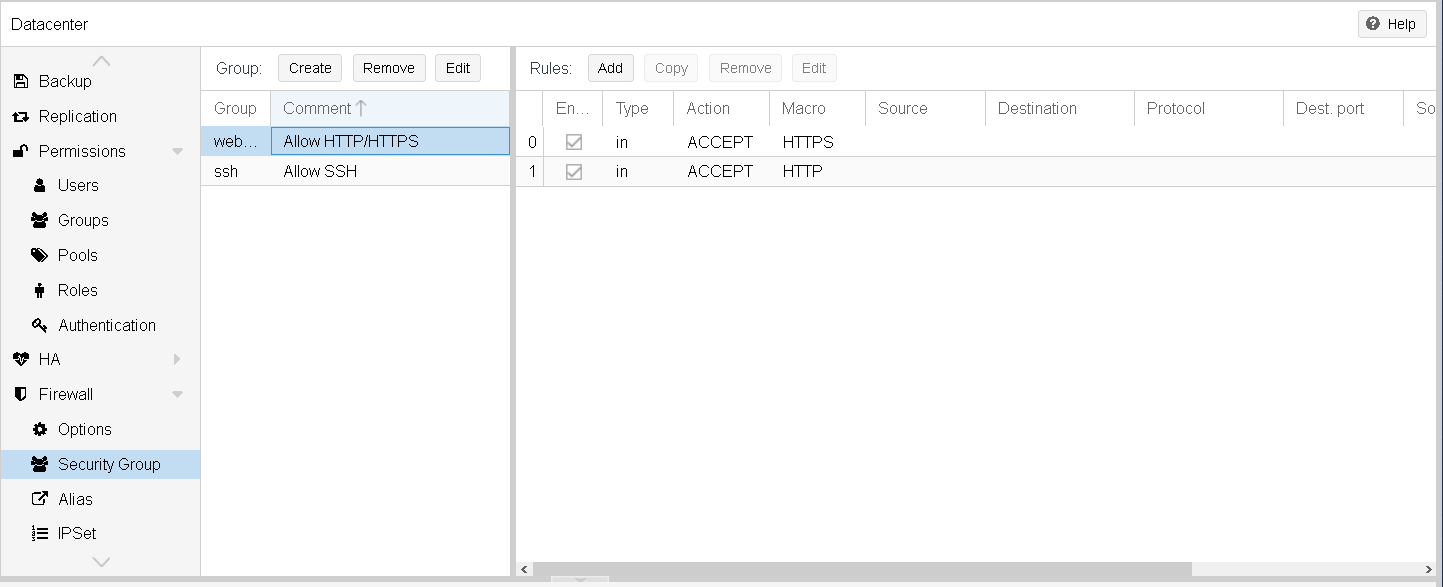

The second-best feature of Proxmox is the firewall. You can set it up at the datacenter level, the host level, and down to each VM. I'm still struggling to perfect it and I'm not entirely sure I understand the implementation correctly (remember the gaping holes in the documentation? This is one of them). I'm still trying to resolve a situation where a firewalled VM cannot communicate with another firewalled VM. It's early days, but I hope to crack this soon.

What is the best feature, then? For the most part, I can live migrate VMs across different CPUs. The default virtual CPU type is kvm64, and so far its instruction set is common to both my Ryzen and my Xeon. This is an excellent feature to have if you're running a mixed-host environment. I know this is not recommended, but sometimes it is what it is.
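A baseline model such as kvm64 migrates cleanly because it only exposes CPU features that every host can provide. The Python sketch below illustrates that check by intersecting the `flags` lines from each host's `/proc/cpuinfo`. The function names are my own, and the flag lists in the example are hypothetical and heavily abbreviated; they are not the real kvm64 definition.

```python
from typing import Iterable, Set


def parse_flags(cpuinfo_text: str) -> Set[str]:
    """Return the feature flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()  # no 'flags' line found


def can_migrate(vm_cpu_flags: Iterable[str], *host_flag_sets: Set[str]) -> bool:
    """A guest CPU model is safe to move between hosts only if every flag
    it exposes is supported by all of them."""
    common = set.intersection(*host_flag_sets) if host_flag_sets else set()
    return set(vm_cpu_flags) <= common


if __name__ == "__main__":
    # Hypothetical, abbreviated flag lines for the two hosts.
    ryzen = parse_flags("flags\t\t: fpu sse sse2 avx avx2 sha_ni\n")
    xeon = parse_flags("flags\t\t: fpu sse sse2 avx avx2\n")
    print(can_migrate({"fpu", "sse2"}, ryzen, xeon))  # True
    print(can_migrate({"sha_ni"}, ryzen, xeon))       # False: Xeon lacks it
```

On a real host you would feed it the contents of `/proc/cpuinfo` from each server. In Proxmox the CPU type is chosen per VM, so this is only a way to reason about why a generic model travels well between a Ryzen and a Xeon.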

Unlike XCP-ng, Proxmox is equally suited to console-only and graphical VMs. You can also run containers (LXC) directly on the host without creating a VM first (I'm still undecided whether this is a good thing or not).

Summary

There is no clear winner here. Both platforms have deal-breakers, so it's a matter of choosing your poison. Proxmox is the clear winner for some things (integration, GUI VMs), while XCP-ng is the champion for others (backups).

With the exception of backups and disk migration, every other feature I use is simply better on Proxmox. However, XCP-ng does not suffer from the I/O of death during I/O-intensive tasks like disk migration and VM restores. This is a deal-breaker (unless you also SSH into your host and ionice the process every time you do this).
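For reference, a minimal Python sketch of that ionice workaround: it prefixes a command-line restore with util-linux `ionice` in the idle class (`-c 3`), so the restore only gets disk time when no other process wants it. `qmrestore <archive> <vmid>` is Proxmox's CLI restore tool; the archive path and VM ID in the example are hypothetical, and the function names are my own. I/O priority classes are only honoured by some I/O schedulers (e.g. BFQ, or CFQ on older kernels), so whether this actually tames the freeze depends on the host's scheduler and on how the restore issues its writes.

```python
import shlex
import subprocess
from typing import List, Optional


def ionice_command(cmd: List[str], io_class: int = 3,
                   level: Optional[int] = None) -> List[str]:
    """Prefix `cmd` with util-linux `ionice`.

    io_class: 1 = realtime, 2 = best-effort, 3 = idle (only gets disk
    time when no other process wants it). `level` (0 highest .. 7 lowest)
    applies to the realtime and best-effort classes only.
    """
    if io_class not in (1, 2, 3):
        raise ValueError("io_class must be 1, 2 or 3")
    wrapped = ["ionice", "-c", str(io_class)]
    if level is not None:
        if io_class == 3 or not 0 <= level <= 7:
            raise ValueError("level must be 0-7 and is not used with the idle class")
        wrapped += ["-n", str(level)]
    return wrapped + list(cmd)


def run_low_priority(cmd: List[str], dry_run: bool = True) -> List[str]:
    """Run `cmd` in the idle I/O class; with dry_run, only print it."""
    full = ionice_command(cmd)
    print(("would run: " if dry_run else "running: ") + shlex.join(full))
    if not dry_run:
        subprocess.run(full, check=True)  # raises CalledProcessError on failure
    return full


if __name__ == "__main__":
    # Hypothetical backup archive on the NAS, restored to hypothetical VM ID 100.
    run_low_priority(["qmrestore",
                      "/mnt/pve/nas/dump/vzdump-qemu-100-example.vma.lzo",
                      "100"])
```

The default is a dry run so nothing touches the host until you pass `dry_run=False`. Restores started from the web interface are not wrapped this way, which is exactly why it has to be done by hand every time.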

Having a host firewall can be useful, but I have yet to get it working the way I want (I'm not entirely sure whether this is user error or a bug). I reckon one could set up iptables on XCP-ng, or even inside the VMs, to get similar results.

Proxmox may be more aggressive with memory usage than XCP-ng, but it's still early days for me.

| Platform | Pros | Cons |
|---|---|---|
| XCP-ng | Xen Orchestra is fantastic; flexible backup options; intuitive console GUI, no need to refer to documentation | No audio emulation; occasional network throughput problems; not suitable if you run a lot of graphical VMs or a mixed-host environment; needs both XCP-ng Center and Xen Orchestra for full administration |
| Proxmox | Live migration across hosts with different CPUs; integrated web interface; good, stable performance with no known network throughput issues AFAICT; excellent GUI experience via SPICE; no need to reboot after an update (unless it is a kernel update); supports audio emulation (ICH9 Intel HDA, Intel HDA, AC97); built-in firewall; container (LXC) support | Make sure you have an FHD screen for installation; I don't like having to number my VMs; lots of gotchas for first-time users; cannot reconfigure Open vSwitch without a reboot; no QEMU guest agent port for FreeBSD (only experimental at this stage); I/O problems when restoring backups or migrating disks on local storage |

For the home lab, go with Proxmox. The call is harder for businesses; it will depend on the use case. In my opinion both solutions are viable alternatives to VMware ESXi, especially if you are running older hardware.

Comments


Thanks. I was wondering which one to use and your article helped me a lot.
